Social networks enhance the good and the bad of our societies. But algorithms are cunning and they know, as well as the media, that the bad outsells the good. After all, nobody would buy a newspaper that ran positive front pages all the time.

Hate is profitable for networks, because it is the type of content that "engages" users the most – upping the clicks, views, subscriptions and other interactions that are draped with advertising cleverly marketed by Google and Facebook. Governments, judicial courts and civil organisations no longer know how to stop this chain reaction. Uncontrolled hatred metastasises in our society.

In our previous article, we focused on two hate groups that targeted very different niches: the Nueva Soberania group and the TLV1 YouTube channel. One used Facebook and Instagram (both social networks belong to Mark Zuckerberg's tech giant) to target its communications at young people and adolescents, prioritising imagery. The other aimed for a privileged Latin American segment, those aged over 40, and did so by copying the conventions of a traditional television channel and broadcasting on YouTube, which is owned by Google.

Trying to get the technological giants to remove this type of material from their platforms is practically impossible. And this is speaking from experience.

To achieve this purpose, as you’ll see, we had to create our own algorithm to analyse and effectively corroborate charges of anti-Semitism and incitement to violence on these channels.

Audience and algorithms

TLV1 has 190,000 subscribers, counting 33 million views from around 3,000 videos over an eight-year history. Type in its name and with just one click, its videos begin to play. There is open hatred of Jews, an anti-vaccine fixation and obvious calls for violence or uprising against government institutions.

The algorithms of the tech firms suggest groups and materials that the platforms detect as liked by similar users. So if a user follows, for example, a neo-Nazi group, Facebook and Google will show them more of the same, feeding their hatred by making more similar content available.

The first article in this series, first published in Spanish on November 22 in the Perfil newspaper, helped to raise awareness of these groups. Sure, the information was public before, but it wasn’t easy to find: it was inside a bubble.

After the story was published, the two companies offered staff to help with the case. The first obstacle was the way these companies evaluate what a "hate group" is.

At YouTube Argentina, they pointed out that they did not see anything strange on the TLV1 channel, although they said they had already received complaints about the channel's videos.

We decided to warn the platforms that we would go to court and take action.

The whole world is debating precisely how platforms should deal with information (and misinformation, misnamed “fake news”) and to what extent they can “censor” certain content. Clearly there are things that, from an ethical point of view, exceed any threshold, such as child pornography and incitement to commit violent crimes. But to what extent should Google and Facebook remove malicious content that, for example, Donald Trump publishes in search of political gain? It is precisely on this boundary that the tech firms take their stand: they appeal to freedom of expression and oppose any move to moderate certain content by invoking the “no responsibility of intermediaries.”

In the background, at the same time, there is an economic issue: the more content people consume on YouTube, Facebook, Instagram and the rest of the networks, the more money the barons of Silicon Valley earn.

But, in the face of a legal claim, such as a copyright violation for an illegal stream of a football game, they do act. After a warning, Google and Facebook remove the videos and posts clearly detected as discriminatory before the courts can see the evidence.

To the right: Messages sent to the author of the article after the release of his first article.

Lesson one

YouTube bans a channel if it receives three valid reports within 90 days – in other words, if a channel publishes a hateful video every 91 days, the platform will keep the channel and only delete the video.
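The loophole can be made concrete with a short sketch. It models only the rule as described above (three valid reports inside a 90-day window), not YouTube's actual enforcement system. The function name `would_be_banned`, the inclusive 90-day boundary and the sample dates are assumptions made for illustration.

```python
from datetime import date, timedelta


def would_be_banned(strike_dates, limit=3, window_days=90):
    """Return True if `limit` strikes ever fall within `window_days` days.

    Assumption: a span of exactly `window_days` still counts as "within".
    """
    ordered = sorted(strike_dates)
    for i in range(len(ordered) - limit + 1):
        # Compare the first and last strike in each run of `limit` strikes.
        if (ordered[i + limit - 1] - ordered[i]).days <= window_days:
            return True
    return False


start = date(2020, 1, 1)  # hypothetical starting date
# One removable video every 91 days: the strikes never cluster.
spaced_out = [start + timedelta(days=91 * n) for n in range(10)]
# Three removable videos inside the same quarter.
clustered = [start, start + timedelta(days=30), start + timedelta(days=60)]

print(would_be_banned(spaced_out))
print(would_be_banned(clustered))
```

Under this reading, ten removed videos spread over two and a half years leave the channel standing, while three removed in two months close it.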

The Argentine justice system, meanwhile, asks if these were hate groups or if you only had two or three videos where one or another “unfortunate phrase” slipped through. With such limited human resources, who in a courtroom would watch 3,000 hours of video to reach such a conclusion?

Although, for example, in the City of Buenos Aires there is the 22nd Prosecutor's Office, headed by Mariela de Minicis and specialised in discrimination, there is not a single ruling on the matter related to digital platforms. It is a complicated issue that the justice system hasn’t managed to get to grips with.

In a controversial ruling known as ‘Belén Rodríguez,’ the Supreme Court accepted that digital platforms are intermediaries, but considered that they had a responsibility for the dissemination of information, the same as a media or publishing company. However, although the legal framework for such cases exists, the slow wheels of justice make it practically impossible to stop content that violates the law from going viral quickly. Platforms should have hundreds of editors controlling what content they are distributing.

Two additional fears assailed us. First, if the justice system moved fast enough and the platforms then appealed any resolution all the way to the last instance (the Supreme Court), who would back us all that way? Second, would we run the risk of causing the opposite effect and helping these groups strengthen their positions as “victims of censorship”?

YouTube, for example, clarified that it was not going to spend the resources necessary to check 3,000 hours of video and that its position was "to defend freedom of expression." In other words: “freedom of expression” was guaranteed as soon as the video was published, and the platforms are not the guardians of such rights; that is the realm of laws and judges. Thus, defending freedom of expression works as an excuse to avoid the work and financial expense of detecting and removing these materials.

The argument is fallacious: the large platforms spend hundreds of millions of dollars a year to create automated systems that detect copyright violations, as well as the appearance of child pornography or content that shows mutilations. Why is it so hard for them with hate groups?

Lesson two

Before hunting down a hate group, it’s better to assume that the platforms, in some way, "are them" or are going to protect them.

It should be noted that neither of the two platforms has a person in Argentina for these cases; everything goes through their PR people, who try to mediate and at the same time escalate the case internally. Countries with little relevance like ours only have personnel linked to the sale of advertising.

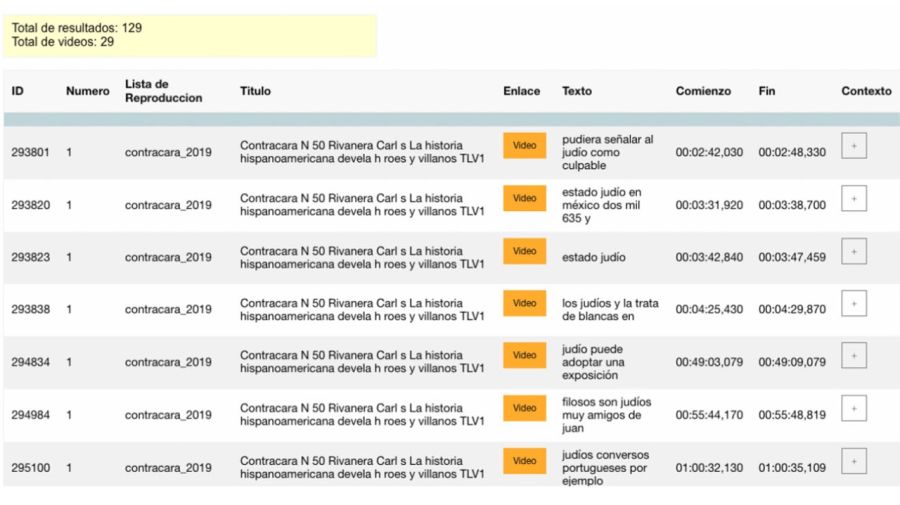

The first barrier had to be jumped. We needed to go from “unfortunate phrases” to demonstrating the existence of a “thematic axis” if we were to show that we were facing a hate group. We were forced to answer with data. From the 3,000 videos on the TLV1 YouTube channel, we took a playlist of 73 videos of one hour each. Subtitles were automatically generated for each video and then inserted into a database that related each line of text to the exact minute and second of the recording, so that any result could later be checked and verified visually.
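As a rough illustration of that step (not the code used in the investigation), the sketch below turns subtitle text into pairs of start time and line. It assumes the subtitles are in an SRT-style layout, which the article does not specify. `parse_cues`, `as_timestamp` and the placeholder text in `SAMPLE` are hypothetical.

```python
import re

# Matches an SRT-style timing line such as "00:01:15,000 --> 00:01:18,500".
TIMING = re.compile(r"(\d+):(\d{2}):(\d{2})[,.]\d+\s*-->")


def parse_cues(subtitle_text):
    """Return a list of (start_seconds, text) pairs from SRT-style subtitles."""
    cues = []
    # Cues are separated by blank lines.
    for block in re.split(r"\n\s*\n", subtitle_text.strip()):
        lines = block.splitlines()
        for index, line in enumerate(lines):
            match = TIMING.match(line.strip())
            if match:
                hours, minutes, seconds = (int(part) for part in match.groups())
                # Everything after the timing line is the spoken text.
                text = " ".join(l.strip() for l in lines[index + 1:] if l.strip())
                cues.append((hours * 3600 + minutes * 60 + seconds, text))
                break
    return cues


def as_timestamp(total_seconds):
    """Format seconds as HH:MM:SS so a reviewer can jump to the moment."""
    minutes, seconds = divmod(total_seconds, 60)
    hours, minutes = divmod(minutes, 60)
    return f"{hours:02d}:{minutes:02d}:{seconds:02d}"


# Neutral placeholder subtitles, invented for this example.
SAMPLE = """1
00:00:05,000 --> 00:00:08,000
placeholder first line

2
00:12:41,250 --> 00:12:44,000
placeholder second line
continued on a second row
"""

for start, text in parse_cues(SAMPLE):
    print(as_timestamp(start), text)
```

Keeping the start time next to every line is what makes each later match verifiable: a reviewer can open the video at that exact moment instead of watching the whole hour.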

We asked this database, for example: how many times was the word "Jew" mentioned in the 73 hours of recording? Result: 129 matches in 29 of the 73 videos.
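A query of this kind can be sketched with an in-memory SQLite database. This is an assumed design, not a description of the database actually used. The table `subtitle_lines`, the function names and the neutral placeholder rows are all hypothetical, and the search term here is simply the word "keyword".

```python
import re
import sqlite3

# Hypothetical rows: (video_id, start_seconds, text). Neutral placeholders
# that only show the shape of the data, one row per subtitle line.
ROWS = [
    ("video-01", 75, "the keyword appears here"),
    ("video-01", 1290, "nothing relevant in this line"),
    ("video-01", 2410, "Keyword again, and keyword once more"),
    ("video-02", 33, "no match in this video"),
    ("video-03", 640, "a keywording lookalike should not count"),
    ("video-03", 905, "one real keyword mention"),
]


def build_database(rows):
    connection = sqlite3.connect(":memory:")
    connection.execute(
        "CREATE TABLE subtitle_lines (video_id TEXT, start_seconds INTEGER, text TEXT)"
    )
    connection.executemany("INSERT INTO subtitle_lines VALUES (?, ?, ?)", rows)
    return connection


def search_term(connection, term):
    """Return (total_mentions, videos_with_mentions, hits) for a whole-word term."""
    pattern = re.compile(rf"\b{re.escape(term)}\b", re.IGNORECASE)
    # LIKE narrows the candidate lines; the regex then counts whole words only.
    candidates = connection.execute(
        "SELECT video_id, start_seconds, text FROM subtitle_lines "
        "WHERE text LIKE ? ORDER BY video_id, start_seconds",
        (f"%{term}%",),
    )
    hits = []
    total = 0
    for video_id, start_seconds, text in candidates:
        count = len(pattern.findall(text))
        if count:
            total += count
            hits.append((video_id, start_seconds, text))
    videos = len({video_id for video_id, _, _ in hits})
    return total, videos, hits


connection = build_database(ROWS)
total, videos, hits = search_term(connection, "keyword")
print(f"{total} matches in {videos} of 3 videos")
for video_id, start_seconds, text in hits:
    print(video_id, start_seconds, text)
```

The list of hits, each with its video and start time, is the part that matters for what follows: a count alone proves nothing, so every match still has to be read in context by a person.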

Although the word Jew is not offensive, from experience it is the one most likely to be used with hate motives within a group of these characteristics.

A lawyer specialised in hate groups, Raúl Martínez Fazzalari, manually verified, one by one, the contexts and whether the entire sentences represented a crime or not. Of the 129 mentions of the word "Jew" he found that 30 were stigmatising or discriminatory in content. In them, a "Jew" is directly linked with finance, world domination, and control of companies. The expressions are framed in supposed classes or comments from experts or speakers. Taken as a whole, the mentions show an evident viciousness aimed at discrediting or stigmatising a group or specific individuals.

In 40 percent of the videos (29 of 73), the word "Jew" was mentioned at an average rate of about 4.4 times per hour. We had managed to search, in a second, for terms that would lead us to hate speech, using data and checking only the matches.
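These ratios follow directly from the counts reported above, as the short check below shows. It assumes, as the article states, that each video in the playlist runs for one hour. The variable names are ours.

```python
videos_in_sample = 73   # one-hour videos in the playlist
videos_with_term = 29   # videos containing at least one mention
total_mentions = 129    # mentions across the whole sample
flagged_mentions = 30   # mentions the lawyer judged stigmatising or discriminatory

share_of_videos = videos_with_term / videos_in_sample
# Assumes one hour per video, so mentions per video equals mentions per hour.
mentions_per_hour = total_mentions / videos_with_term
flagged_share = flagged_mentions / total_mentions

print(f"{share_of_videos:.1%} of videos contain the term")
print(f"{mentions_per_hour:.2f} mentions per hour in those videos")
print(f"{flagged_share:.1%} of mentions were flagged")
```

The last figure shows why manual review remained essential: by these counts, fewer than one in four matches was judged discriminatory once read in context.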

Once this playlist, which represented about 2.4 percent of the channel's roughly 3,000 videos, had been analysed, we published the findings on the Lanata Sin Filtro programme on Radio Mitre and informed the platforms that the complete database was at their disposal.

YouTube took down the TLV1 channel, with 190,000 followers, the next day.

Once TLV1 was closed, messages of support for the channel began to circulate through its own networks and other sympathetic groups, denouncing "censorship by JewTube," as they called it in a Telegram group.

As word spread that the channel’s videos were unreachable, individuals began to send me intimidating messages on social network platforms. (Author's note: The accounts are still open at the time of writing.)

Then they began to spread my identity and my photo around among the group. Fascism and anti-Semitism swamped my social media accounts.

Lesson three

There is still no cross-platform "hate management" protocol.

Google’s decision to close the TLV1 channel was not enough to move Facebook: TLV1's accounts stayed on that platform. The group later used them to reorganise its troops and launch personal attacks against me.

But even more surprising was when a TLV1 “sleeper channel” appeared on YouTube. Called “TLV1 Historical,” it had only 4,000 subscribers but featured a video spreading insults against Perfil founder Jorge Fontevecchia and Jorge Lanata, the host of the radio programme that aired the story. We were all accused of being part of or responding to the “Jewish lobbies” that operate entrenched in Argentina. We were accused of being "political operators" to the detriment of freedom of expression.

Fearing direct physical attacks, we communicated our fears to Facebook and Google that the lack of a protocol against hate speech and threats had exposed us, since the coordination behind the threats was coming directly from their platforms.

Initially, there was no response. But when we broadcast, live on Radio Mitre, the audio of the YouTube video that referred to us, a secondary channel called TLV1 Archivo was shut down within two hours.

Lesson four

What hurts platforms the most are public denunciations of their bad practices. Facebook-Instagram remained unmoved. So did the 22nd Prosecutor's Office. Meanwhile, TLV1 recovered from the blow by using Facebook instead.

We decided to double the bet. We ingested 10 times more videos than in the first sample, reaching a database of one million lines of text and 740 hours of video from the channel in question.

Our initial goal is to find as many phrases as possible that involve hateful attitudes to document our work. But above all, it is about getting the platforms and the justice system to take control and act.

It is not so difficult to recognise hate speech and groups. By using the tools at our disposal, we can prevent, or at least limit, their spread.

To collaborate with our investigation, click here and help us detect the phrases that constitute a crime.

Thank you for reading. For more information, visit https://investigacion.perfil.com.

---

This article is the second of two that originally appeared in the Perfil newspaper and has been translated and edited. It can be read in the original Spanish here. The first article in the series can be read in English here.
